(I also installed nvidia-docker2 on the server before adding this line to jupyterhub_config.py, but I am not sure whether this was necessary or contributed anything to the solution.)

RUN echo "/usr/local/nvidia/lib" > /etc/ld.so.conf.d/nf && \
    echo "/usr/local/nvidia/lib64" > /etc/ld.so.conf.d/nf
ENV PATH /usr/local/nvidia/bin:/usr/local/cuda/bin:$
# For libraries in the cuda-compat-* package:

After adding this line to jupyterhub_config.py and restarting the jupyterhub service, all GPUs were visible within the container, and nvidia-smi showed all available GPU devices as expected.
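The configuration line itself is not reproduced above, so the following is only a sketch of what such a setting might look like, not the line that was actually used. It assumes DockerSpawner's `extra_host_config` option and a Docker runtime registered under the name `nvidia` (which nvidia-docker2 typically provides). The helper names `nvidia_host_config`, `parse_gpu_list` and `list_gpus` are hypothetical. The second part parses the output of `nvidia-smi -L` to check which GPUs a container can see.

```python
import re
import subprocess


def nvidia_host_config(runtime="nvidia"):
    """Build host-config kwargs that ask Docker to use the given runtime.

    Assumption: a runtime with this name is registered with the Docker daemon.
    """
    return {"runtime": runtime}


# Possible use inside jupyterhub_config.py, where `c` is the config object
# that JupyterHub provides (assumption: DockerSpawner is the spawner in use):
#
#   c.DockerSpawner.extra_host_config = nvidia_host_config()


def parse_gpu_list(text):
    """Parse `nvidia-smi -L` style output into (index, name) tuples."""
    pattern = re.compile(r"^GPU (\d+): (.+?) \(UUID: .+\)$", re.MULTILINE)
    return [(int(index), name) for index, name in pattern.findall(text)]


def list_gpus():
    """Return the GPUs visible to this process, or [] if nvidia-smi fails."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_gpu_list(result.stdout)
```

Calling `list_gpus()` from a notebook inside the spawned container gives a quick check: an empty list suggests the container was started without GPU access.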

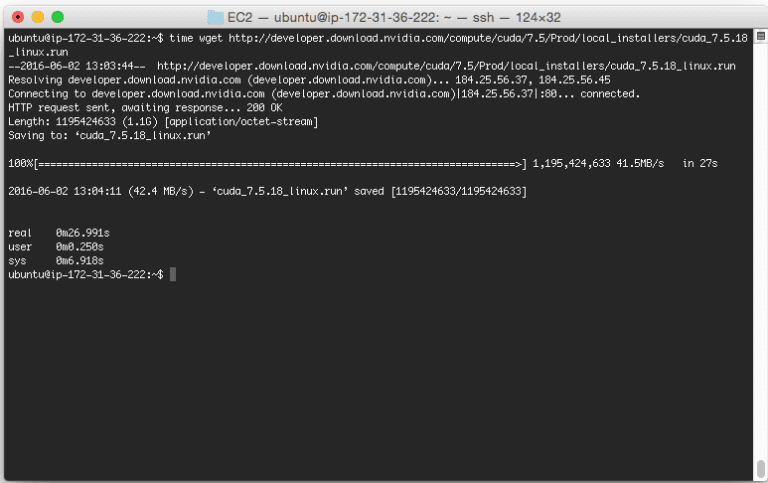

#Cuda toolkit docker install

RUN apt-get update && apt-get install -y --no-install-recommends \
    echo "deb /" > /etc/apt//cuda.list && \
    echo "deb /" > /etc/apt//nvidia-ml.list && \
ENV CUDA_PKG_VERSION 10-1=$CUDA_VERSION-1

Install CUDA on WSL2: run the following commands from the CUDA on WSL User Guide. Set the default WSL engine to WSL2: C:\> wsl.exe

After installing Ubuntu, enable the WSL integration for Docker.

1.) The situation:

We cannot access a GPU in our docker container spawned with jupyterhub…

We are using a headless Ubuntu server 18.04 LTS. It may be important to mention that I have different conda environments installed on the host for the users who spawn the containers: Miniconda 4.7.10 on a per-user basis (a separate installation under each user directory, so that each user has their own environments and so on). These environments are accessible within the jupyter notebook through different installed kernels, which one may select when running the notebook. For our application we use a kernel/env other than “base”.

We have installed jupyterhub version 1.0.0.

The following nvidia packages are installed on the headless server:

docker-ce-cli 5:19.03.2~3-0~ubuntu-bionic

We use the Systemuser spawner, as we want to enable access to the home directories of the users who are logged into jupyterhub.

We have 4 Tesla GPUs that we want to enable within 4 docker containers on the jupyterhub. This means that at most 4 containers run with a GPU enabled (one Tesla each) and the other containers have no GPU.

Here is the info for the GPUs:

nvidia-smi

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr.
| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |
| N/A 34C P0 27W / 250W | 0MiB / 16280MiB | 0% Default |
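The constraint described in the question (four Teslas, at most four containers with one GPU each, all further containers without a GPU) can be sketched as a small allocation pool. This is an illustrative sketch only, not part of the setup described here. The class `GpuPool` and its methods are hypothetical, and it assumes the NVIDIA container runtime is in use and reads the `NVIDIA_VISIBLE_DEVICES` environment variable (a GPU index exposes that device, `none` exposes no GPU).

```python
class GpuPool:
    """Hand out each GPU index to at most one user container at a time."""

    def __init__(self, gpu_count=4):
        self._free = list(range(gpu_count))  # GPU indices not yet assigned
        self._owner = {}                     # username -> assigned GPU index

    def acquire(self, username):
        """Return container environment variables for this user.

        A user who already holds a GPU gets the same one again. Once all
        GPUs are taken, the container is started without a GPU.
        """
        if username in self._owner:
            index = self._owner[username]
        elif self._free:
            index = self._free.pop(0)
            self._owner[username] = index
        else:
            return {"NVIDIA_VISIBLE_DEVICES": "none"}
        return {"NVIDIA_VISIBLE_DEVICES": str(index)}

    def release(self, username):
        """Give the user's GPU back to the pool when the container stops."""
        index = self._owner.pop(username, None)
        if index is not None:
            self._free.append(index)
            self._free.sort()


if __name__ == "__main__":
    pool = GpuPool(gpu_count=4)
    for name in ["alice", "bob", "carol", "dave", "erin"]:  # hypothetical users
        print(name, pool.acquire(name))
```

How the returned variables reach the container depends on the spawner. One option would be to merge them into the spawner's environment before the container starts, but that wiring is left out here because it is not described in the post.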
